
Subscribe to our free weekly newsletter by entering your email address below.

Money-Guy 01-21-2011

…but I’m never wrong! Or at least that is how the old adage goes. This may not be the exact mantra of modern-day economic “prophets”, but it isn’t far off. It seems (and the study we share supports this) that a forecaster only has to get one big prediction right for their word to be taken as good as gold.

Today’s show is based on a Boston Globe article titled “That Guy Who Called the Big One? Don’t Listen to Him”. The article highlights how “Dr. Doom” (Nouriel Roubini) correctly predicted the 2007-2009 economic downturn. Predicting the largest economic downturn since the Great Depression is a noteworthy accomplishment, so you would assume that someone with that much economic knowledge and foresight has an impressive track record. Well, according to Joe Keohane, that isn’t always the case.

Mr. Keohane went back and compiled some of Dr. Roubini’s past predictions and found that he was often wrong. The article notes that in 2008 he predicted that “hundreds of hedge funds were on the verge of failure and that the government would have to close the markets for a week or two in the coming days to cope with the shock.” Obviously, he missed the mark on this one. In 2009 he was convinced that oil prices would stay below $40 and that car companies should start increasing production of SUVs. As time has shown, quite the opposite happened. The last prediction the article cites is that the S&P 500 would fall below 600 in 2009, a year in which the index actually returned 23.5%.

What does Mr. Keohane attribute this inconsistency to? Put simply:

“The people who successfully predict extreme events, and are duly garlanded with accolades, big book sales, and lucrative speaking engagements, don’t do so because their judgment is so sharp. They do it because it’s so bad.”

Two economists, Jerker Denrell and Christina Fang, set out to determine whether some people really are better at predicting the future than others. In the show, we explain how they conducted their research. What they found was that economists with a better record at calling extreme events actually had a worse record overall. We go on to explain how these results are not limited to economic forecasting but also show up in weather and political forecasting.

We also cover other findings by Denrell and Fang and touch on how our judgment can be warped by our bias toward success. We then share a listener email and offer what we feel is a unique insight: your current housing situation may not be as bad as it seems, and even though it may not be obvious, there are ways to potentially “turn your lemon into lemonade”.

To close out the show, we share a fun Consumer Reports article titled “Save by Cutting Waste”. Well, tightwad Tod, you have outdone even the Money-Guys. Listen for ways to save on bananas, bar soap, condiments, cookies, cornflakes, hair gel, honey, laundry detergent, pump-top hand lotion, shampoo, sugar, and toothpaste.

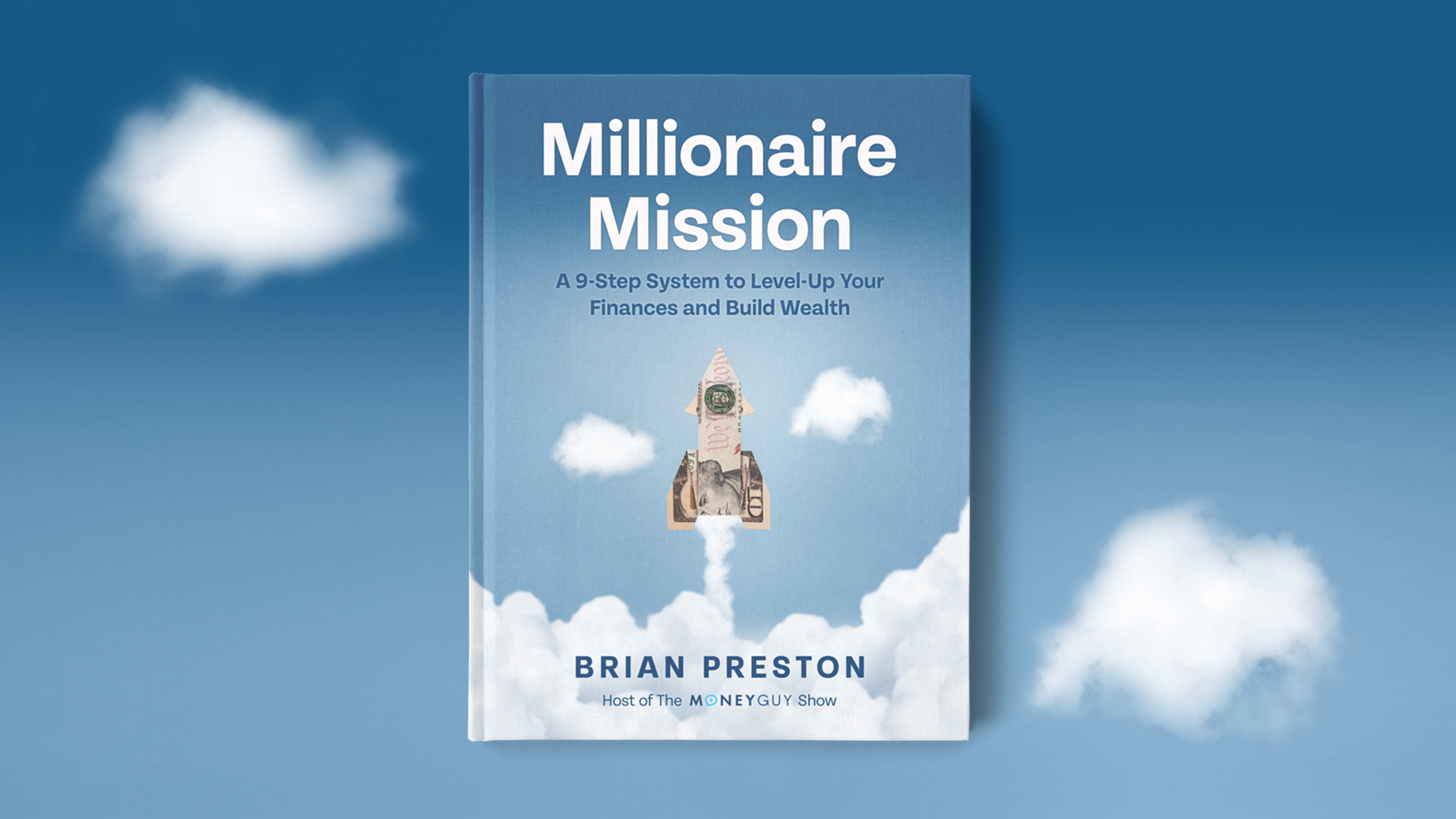

